By Mrinmoy Das

As a solution architect, my main task is to understand the market and deliver products that meet its highest standards.

Creating a dynamic ETL tool like OneDATA.Plus is a challenge in itself. On top of that, we set out to build a system that is reliable, high-performing, and robust. We also keep security at the forefront, because that is what allows our customers to trust us with their data.

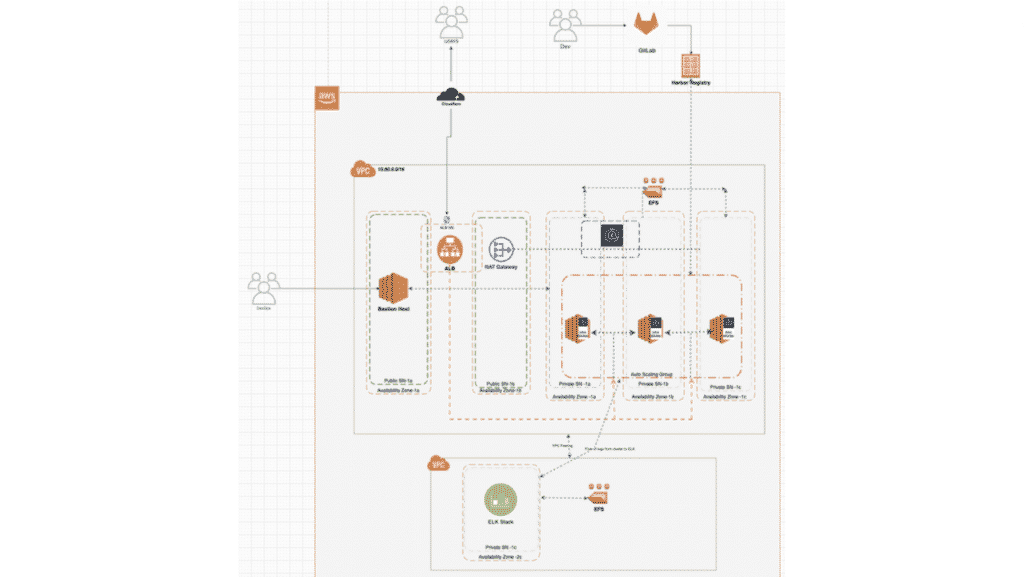

With OneDATA.Plus, our team has built an architecture that meets all of its needs using free and open-source software (see Exhibit 1). As the Solution Architect, my job is to make sure the service we provide to customers is up to par, with no compromise on quality.

Our approach was a pre-mortem. In a post-mortem, we try to understand what went wrong after the event; in a pre-mortem, we anticipate what could go wrong before building anything and list up front all the tools our system will need. For a self-managed architecture, we found nothing better than Kubernetes.

As a smaller organization, efficient resource utilization has always been crucial for us. Bringing up servers only when they are needed has saved us a significant amount of resources over time. Our architecture runs on 15 to 25 servers, depending on customer demand, and scales up and down automatically without human intervention. Using latest-generation server instances has made this considerably easier for BDIPlus.
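The article does not say which component makes the scaling decision (on Kubernetes this is typically handled by the Cluster Autoscaler or a similar tool). As a rough illustration only, the sketch below turns demand into a node count and clamps it to the 15 to 25 range mentioned above; `desired_node_count`, `scaling_action`, and the notion of "workload units" are hypothetical.

```python
import math

# Node bounds from the article; the workload model below is hypothetical.
MIN_NODES = 15
MAX_NODES = 25


def desired_node_count(pending_workload_units, capacity_per_node):
    """Nodes needed to cover the workload, clamped to [MIN_NODES, MAX_NODES]."""
    if capacity_per_node <= 0:
        raise ValueError("capacity_per_node must be positive")
    needed = math.ceil(pending_workload_units / capacity_per_node)
    return max(MIN_NODES, min(MAX_NODES, needed))


def scaling_action(current_nodes, desired_nodes):
    """Translate a target node count into an action and a node delta."""
    if desired_nodes > current_nodes:
        return ("scale_up", desired_nodes - current_nodes)
    if desired_nodes < current_nodes:
        return ("scale_down", current_nodes - desired_nodes)
    return ("none", 0)
```

Clamping on both ends keeps a warm floor of capacity for incoming requests while capping cost during spikes.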

Our network is split into two subnets, one public and one private. The public subnet hosts the servers that build Docker images for our application code, a machine running Pritunl that gives developers' local systems VPN access to the services in the environment, and a bastion host that serves as the only entry point into our system. Although the bastion is not part of the main Kubernetes cluster, every outside developer or system engineer reaches the cluster through it. We designed the system this way for security: if we detect an intrusion, we shut off the bastion, and the main cluster keeps running inside the private subnet, unaffected. A web application firewall (WAF) in front of our load balancer also helps us mitigate DDoS attacks and block intruders trying to compromise the system.
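One way to reason about this layout is to model it in a few lines of Python. The CIDR ranges, component names, and hop list below are hypothetical, and the model covers only the bastion path. It checks that the two subnets do not overlap and that shutting off the bastion cuts outside access to the cluster without moving the cluster itself.

```python
import ipaddress
from collections import deque

# Hypothetical VPC range; the real CIDR blocks are not published.
VPC_CIDR = ipaddress.ip_network("10.0.0.0/16")

# Carve two /24 subnets out of the VPC: the first public, the second private.
_subnets = VPC_CIDR.subnets(new_prefix=24)
PUBLIC_SUBNET = next(_subnets)   # 10.0.0.0/24
PRIVATE_SUBNET = next(_subnets)  # 10.0.1.0/24

# Which subnet each component described above lives in.
PLACEMENT = {
    "image-builder": PUBLIC_SUBNET,
    "pritunl": PUBLIC_SUBNET,
    "bastion": PUBLIC_SUBNET,
    "k8s-cluster": PRIVATE_SUBNET,
}

# Allowed administrative hops (source, destination); hypothetical and
# simplified to the bastion path only.
ADMIN_HOPS = {("internet", "bastion"), ("bastion", "k8s-cluster")}


def reachable(src, dst, hops):
    """Breadth-first search over the allowed hops."""
    seen, pending = {src}, deque([src])
    while pending:
        node = pending.popleft()
        if node == dst:
            return True
        for a, b in hops:
            if a == node and b not in seen:
                seen.add(b)
                pending.append(b)
    return False


def close_bastion(hops):
    """Drop every hop into or out of the bastion, e.g. after an intrusion alert."""
    return {(a, b) for a, b in hops if "bastion" not in (a, b)}
```

With `ADMIN_HOPS`, `reachable("internet", "k8s-cluster", ADMIN_HOPS)` is true; after `close_bastion`, the same check is false while the cluster's placement in the private subnet is unchanged.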

Data retention is one of the main concerns of any system. Although OneDATA.Plus's application runs on the Kubernetes cluster nodes, its data is stored in Amazon EFS, which spans multiple Availability Zones. This design supports disaster recovery: during a disaster, the entire system is brought up immediately in another region and reattaches to EFS, so the data is retained.
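On Kubernetes, EFS is usually mounted through the Amazon EFS CSI driver (`efs.csi.aws.com`). The sketch below assumes static provisioning with that driver and generates a PersistentVolume and PersistentVolumeClaim as a JSON `List` that `kubectl apply -f` accepts. The `efs-sc` StorageClass name, the resource names, and the file system ID are placeholders, not values from our deployment.

```python
import json


def efs_manifests(file_system_id, name="onedata-data", size="5Gi"):
    """Build a PersistentVolume and a matching PersistentVolumeClaim backed by EFS."""
    storage_class = "efs-sc"  # hypothetical StorageClass name
    pv = {
        "apiVersion": "v1",
        "kind": "PersistentVolume",
        "metadata": {"name": f"{name}-pv"},
        "spec": {
            # EFS does not enforce a size, but Kubernetes requires the field.
            "capacity": {"storage": size},
            "volumeMode": "Filesystem",
            # EFS is a shared file system, so many pods can mount it at once.
            "accessModes": ["ReadWriteMany"],
            # Retain keeps the data on EFS even if this PV object is deleted.
            "persistentVolumeReclaimPolicy": "Retain",
            "storageClassName": storage_class,
            "csi": {"driver": "efs.csi.aws.com", "volumeHandle": file_system_id},
        },
    }
    pvc = {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": f"{name}-pvc"},
        "spec": {
            "accessModes": ["ReadWriteMany"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
            # Bind explicitly to the PV above.
            "volumeName": f"{name}-pv",
        },
    }
    return [pv, pvc]


if __name__ == "__main__":
    # Placeholder file system ID; replace it with the real one.
    manifest = {"apiVersion": "v1", "kind": "List",
                "items": efs_manifests("fs-0123456789abcdef0")}
    print(json.dumps(manifest, indent=2))
```

The `Retain` reclaim policy is the choice that matters for recovery: deleting the Kubernetes objects never deletes the data, so a rebuilt cluster that can reach the file system can bind to it again.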

The application's flow is fairly simple. Any input our customer provides is captured and stored in the database (see Exhibit 2). The database then acts as the producer, and the consumer is the heart of the application, which we call the Event Engine. KubeMQ can move on the order of a million messages through the queue to the consumer. The Event Engine processes each message and invokes individual services, discovered through our service mesh, each of which performs one part of the overall workflow. Each service has a Horizontal Pod Autoscaler attached so that it can scale during execution, which lets us process a high volume of requests without compromising the quality we provide to our customers.

All communication uses RESTful APIs and stays internal to the Kubernetes mesh network. Because the architecture is built from microservices, the programming language behind each service does not matter as long as it exposes RESTful endpoints; across the application we use Python, Java (Spring Boot), Node.js, and ReactJS. And since the major cloud providers offer SDKs, extending the network plane across multiple clouds is straightforward.
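A minimal, in-process sketch of that flow follows. It is not KubeMQ's API: Python's standard `queue.Queue` stands in for the KubeMQ queue, plain functions stand in for the REST microservices, and every name (`Event`, `produce`, `event_engine`, the three services) is hypothetical. The last function reproduces the replica formula documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current × currentMetric / targetMetric), clamped to the configured minimum and maximum; the real controller also applies a tolerance band and stabilization windows, which are omitted here.

```python
import math
import queue
from dataclasses import dataclass, field


@dataclass
class Event:
    """One captured customer input, as emitted by the database (the producer)."""
    event_type: str
    payload: dict = field(default_factory=dict)


# Hypothetical workflow steps; in production each would be a separate
# microservice reached over REST inside the service mesh.
def extract(payload):
    return {**payload, "extracted": True}


def transform(payload):
    return {**payload, "transformed": True}


def load(payload):
    return {**payload, "loaded": True}


SERVICES = {"extract": extract, "transform": transform, "load": load}


def produce(rows, q):
    """Producer stand-in: enqueue one event per stored database row."""
    for row in rows:
        q.put(Event(row["type"], row.get("data", {})))


def event_engine(q):
    """Consumer: drain the queue and dispatch each event to its registered service."""
    results = []
    while True:
        try:
            event = q.get_nowait()
        except queue.Empty:
            break
        handler = SERVICES.get(event.event_type)
        if handler is None:
            results.append(("unroutable", event.event_type))
        else:
            results.append((event.event_type, handler(event.payload)))
        q.task_done()
    return results


def hpa_desired_replicas(current_replicas, current_metric, target_metric,
                         min_replicas=1, max_replicas=10):
    """HPA rule: ceil(current * currentMetric / targetMetric), clamped to [min, max]."""
    desired = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, desired))
```

For example, three replicas averaging 90% CPU against a 60% target would be scaled to ceil(3 × 90 / 60) = 5 replicas.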

To add a layer of authentication to our external-facing APIs, we use Kong, a free and open-source API gateway. It is simple to deploy in front of any service and adds OAuth 2.0 authentication to RESTful endpoints.
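From a client's point of view, calling an API behind Kong with OAuth 2.0 means first obtaining an access token and then presenting it as a bearer token. The sketch below only builds the two requests with the standard library (nothing is sent). It assumes Kong's OAuth 2.0 plugin with the client-credentials grant, with the token endpoint exposed at `/oauth2/token` under the protected route; check that path against your Kong version, and treat the base URL and credentials as placeholders.

```python
import urllib.parse
import urllib.request


def build_token_request(route_base_url, client_id, client_secret):
    """Build (but do not send) a client-credentials token request.

    Assumes Kong's OAuth 2.0 plugin serves its token endpoint at
    <route>/oauth2/token; the plugin expects HTTPS in production.
    """
    body = urllib.parse.urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
    }).encode("ascii")
    return urllib.request.Request(
        url=f"{route_base_url.rstrip('/')}/oauth2/token",
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


def build_api_request(api_url, access_token):
    """Build a GET request that presents the access token as a bearer token."""
    return urllib.request.Request(
        api_url,
        headers={"Authorization": f"Bearer {access_token}"},
        method="GET",
    )
```

To actually send them, pass each request to `urllib.request.urlopen` and read `access_token` from the JSON body of the token response.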

Using infrastructure as code with Boto3, Paramiko (for SSH-based configuration), Terraform, and Pulumi, we can provision as many servers as our end users require. These servers are not tied to any one cloud; we can create them across all major providers, including AWS, Azure, and GCP.
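On the AWS side, provisioning with Boto3 comes down to the EC2 client's `run_instances` call. The helper below is a hypothetical sketch: the instance type, tag, and subnet are illustrative defaults, and the client is passed in so the function can be exercised without AWS credentials. Azure and GCP resources would go through their own SDKs or through Terraform and Pulumi providers.

```python
def launch_servers(ec2_client, count, image_id, instance_type="m6i.large",
                   subnet_id=None, owner="onedata"):
    """Launch `count` EC2 instances through a Boto3 EC2 client and return their IDs.

    Defaults (instance type, tag) are illustrative, not taken from the article.
    """
    if count < 1:
        raise ValueError("count must be at least 1")
    params = {
        "ImageId": image_id,
        "InstanceType": instance_type,
        # MinCount == MaxCount: launch exactly `count` instances or none at all.
        "MinCount": count,
        "MaxCount": count,
        "TagSpecifications": [{
            "ResourceType": "instance",
            "Tags": [{"Key": "Owner", "Value": owner}],
        }],
    }
    if subnet_id is not None:
        params["SubnetId"] = subnet_id
    response = ec2_client.run_instances(**params)
    return [instance["InstanceId"] for instance in response["Instances"]]


# Usage (requires AWS credentials and a real AMI ID):
#   import boto3
#   ids = launch_servers(boto3.client("ec2", region_name="us-east-1"), 3,
#                        "ami-0123456789abcdef0")
```

Injecting the client keeps the provisioning logic testable with a stub and makes the region an explicit choice at the call site.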

Building a system at the scale of OneDATA.Plus is only part of the job; maintaining it also takes sustained effort. To make maintenance easier, the monitoring stack is deployed in a separate VPC peered with the main one. That VPC hosts an EFK stack (Elasticsearch, Fluentd, and Kibana) that collects and visualizes incoming logs, and Filebeat agents are configured to ship details about the Kubernetes workflows to it. A glance at the dashboard tells the team whether the system is working as expected.
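Log pipelines like EFK work best when each service writes one structured record per line to stdout, which Kubernetes captures and a shipper such as Fluentd or Filebeat can forward without custom parsing. The formatter below is one minimal way to do that with Python's standard `logging` module; the field names, including the `workflow_id` field for tying log lines to a workflow run, are hypothetical.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object on one line."""

    def format(self, record):
        entry = {
            "time": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Hypothetical extra field linking the line to a workflow run.
        workflow_id = getattr(record, "workflow_id", None)
        if workflow_id is not None:
            entry["workflow_id"] = workflow_id
        if record.exc_info:
            entry["exception"] = self.formatException(record.exc_info)
        return json.dumps(entry)


def make_logger(name="event-engine", stream=sys.stdout):
    """Logger that writes JSON lines to `stream` (stdout by default)."""
    logger = logging.getLogger(name)
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger
```

A call such as `make_logger().info("step finished", extra={"workflow_id": "wf-42"})` then emits one JSON line whose `workflow_id` field can be filtered on once it is indexed.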

Combining free and open-source software into a single platform (see Exhibit 3) gives us a system powerful enough to serve 2,000 requests per second. That combination of free tools is what made a dynamic ETL tool of this caliber achievable.
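To put the 2,000 requests-per-second figure in perspective, here is some back-of-the-envelope arithmetic. The 50 ms average latency is a hypothetical value, not a measured one; Little's law (requests in flight $L$ = arrival rate $\lambda$ × time in system $W$) then gives the concurrency the cluster must sustain.

$$
2{,}000\ \tfrac{\text{requests}}{\text{s}} \times 86{,}400\ \tfrac{\text{s}}{\text{day}} = 172{,}800{,}000\ \tfrac{\text{requests}}{\text{day}}
$$

$$
L = \lambda W = 2{,}000\ \tfrac{\text{requests}}{\text{s}} \times 0.05\ \text{s} = 100\ \text{requests in flight}
$$

At the same throughput, doubling the latency would double the number of requests in flight.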

A well-designed data pipeline, a combination of open-source tools, and close attention to security all come together in OneDATA.Plus, helping thousands of people transform their data in real time and achieve better business outcomes. With the right motivation, building a self-managed, reliable system is quite achievable.